All Warfare Is Based on Deception—Troops, Vets Targeted by Disinformation Can Fight Back

Type “#MilTok” into the search bar on TikTok and you’ll be greeted with page after page after page of military videos. Soldiers trash-talking sailors. Sailors trash-talking soldiers. Military memes, uniform hacks, service members waxing long about their boot camp experience, half-dressed Army bros showing off their abs. TikTok allows for an extraordinary window into daily military life and culture, inconceivable a few decades ago.

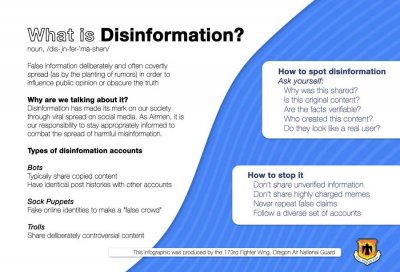

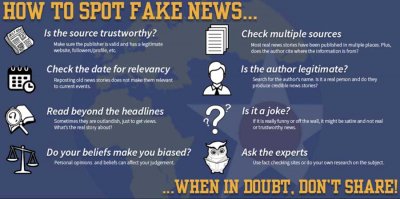

A poster describes the dangers of disinformation on social media, as well as how to detect and counter it. Graphic by Senior Airman Adam Smith, courtesy of the U.S. Air National Guard.

It’s also an extraordinary source of information broadly, the scope of which was similarly hard to predict not long ago. As content multiplies, so does misinformation. TikTok, where millions of users upload short videos, is particularly notorious for misleading content. In the second half of 2020 alone, TikTok removed or added a warning label to more than three-quarters of a million videos that showed unsubstantiated content, misinformation, or evidence of manipulation.

The military officially banned TikTok on government-issued devices two years ago. But as #MilTok makes evident, the app is popular with service members, who access it on their personal devices. This usage highlights the novelty and complexity of mis- and disinformation. Unlike more traditional threats that target military members, this one is more likely to appear while you check your phone at a bar than as you maneuver on the battlefield.

“Historically, disinformation has always been out there,” says Capt. Adam Wendoloski, an instructor at West Point who spoke to The War Horse as an expert in disinformation, rather than as a representative of West Point. “But given the way everything’s digitized now … you’re going to walk away from your job or go home from work and pull up Facebook on your phone, and chances are some of that stuff you’re looking at came from an adversary.”

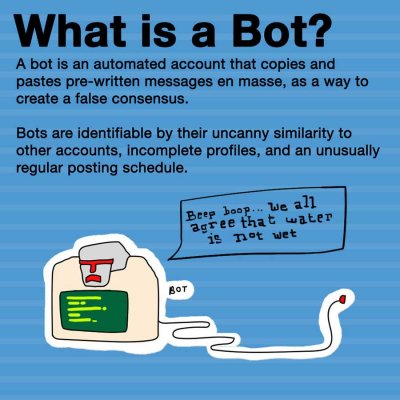

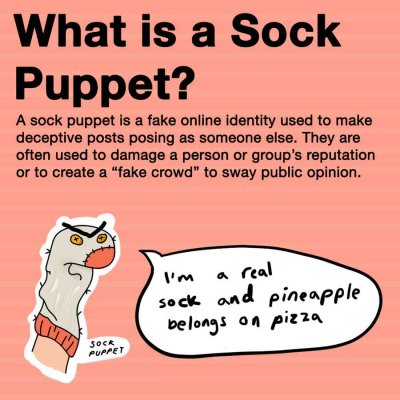

A poster explains not all social media accounts belong to real people. Graphic by Senior Airman Adam Smith, courtesy of the U.S. Air National Guard.

Countering the threat must be similarly novel and complex, and calls are increasing for policies to address disinformation that targets troops. But the military community can take fairly simple steps now, such as talking about how information campaigns typically work, understanding the research into counteracting them, and mapping out specific threat landscapes, that go a long way toward stopping mis- and disinformation from spreading. And while the military, in particular, is targeted by disinformation campaigns, its experience with identifying and combating threats also positions service members to lead the way in fighting back.

“To me, it’s enemy contact,” Wendoloski says. “It’s no different than any other weapon on the battlefield.”

‘Why Developing a Healthy Skepticism Is Important’

In recent years, as the scope of the threat misinformation poses has become clear, high-level efforts to prepare for and defend against it have expanded. Last year’s National Defense Authorization Act extended the authority of the Department of State’s Global Engagement Center, which was set up in 2016 to direct and coordinate the federal government’s mission to counter foreign disinformation efforts. DARPA has been working on the issue, including a 2020 project to develop technology to detect and track disinformation campaigns. And in recent years, the various services have beefed up their information-warfare efforts, standing up new units and positions dedicated to information, communications, and cyberwarfare.

But the Defense Department lacks a comprehensive strategy to address mis- and disinformation, and has paid little attention to disinformation readiness at the troop level—although last year, the Air Force added digital literacy as a foundational competency for all airmen. Airmen can complete a self-assessment of their digital literacy skills. The Joint Chiefs of Staff also offers an optional “influence awareness” course that addresses threats in the information landscape but does not go into great detail about the tools available to address them.

Information systems technicians assigned to the amphibious assault ship USS Bataan pose for a group photo with Bataan Communications Officer Warrant Officer Nkosa Morris, second from right, for Women’s History Month. Photo by Mass Communication Spc. 2nd Class Anna E. Van Nuys, courtesy of the U.S. Navy.

Misinformation experts say the size and scope of the threat misinformation poses call for a broader defense, and that starts with beefing up the defenses of would-be targets. In an ideal world, that would happen early. In Finland, for example, which has mounted a country-wide initiative against misinformation, even young schoolchildren receive misinformation counter-messaging and training. But in the absence of a broader campaign, experts see opportunity in the military, which is particularly well-versed in providing the platforms and training to shape people in specific ways.

“People come into the military with all sorts of different beliefs and skills or lack of skills,” says Peter Singer, author of the book LikeWar: The Weaponization of Social Media and senior fellow at New America. “The military doesn’t say, ‘Well, this person already knows how to have two-factor passwords on their email. I guess I’m not going to require them to get that cybersecurity refresher training.’ We train them. It’s the same thing here.”

Some of the training showcases the many publicly available tools, like fact-checking sites such as factcheck.org, Poynter Institute’s PolitiFact, or snopes.com, which has been debunking online rumors since the 1990s.

Reverse image search engines, like TinEye, let anyone enter an image and see its origin, as well as whether it has been digitally altered. During the George Floyd protests in 2020, for instance, images circulating on social media that purported to show rioters had actually been taken earlier, in entirely different contexts.

‘Teach Students How to Think, Not What to Think’

But preparing for an incoming attack on a belief system requires awareness—not just of the steps needed to verify a piece of information, but why taking those steps is important. It also means developing a broader understanding of how information moves online and why certain posts or search results appear for certain people. This set of skills is known as digital literacy. Its emphasis isn’t on what specifically is true or false, but rather on the skills needed to evaluate the tidal wave of information we each encounter every day.

“There’s an important emphasis that needs to be made on teaching students how to think, not what to think,” says John Silva, senior director of professional learning at the News Literacy Project. “Part of that is about understanding why developing a healthy skepticism is important.”

Troops, of course, can be taught those skills. Organizations like the News Literacy Project have apps and games that teach skills such as how to spot misinformation and how to fact-check social media posts. Browser plugins, such as WeVerify, provide contextual information to help internet users evaluate the veracity of YouTube videos and images on social media. And myriad organizations, like Calling Bullshit, a product of two professors at the University of Washington, provide tools and reading material to help make developing a healthy skepticism fun.

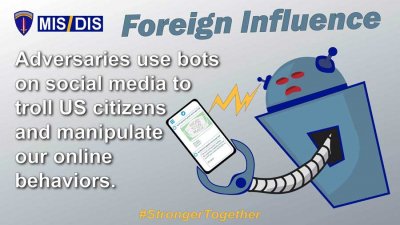

The U.S. Army created a misinformation/disinformation prevention campaign for social media in 2021. Photo by Staff Sgt. George Davis, courtesy of the U.S. Army.

The military creates highly standardized training, with a one-size-fits-all approach—a necessity given the sheer amount of information that needs to be conveyed to hundreds of thousands of service members. But given the way disinformation campaigns work, static training doesn’t make sense, says Marine Maj. Jennifer Giles, who has written about disinformation in the military. People are far more likely to incorporate the skills they learn into daily habits if the utility of those habits is clear from the beginning.

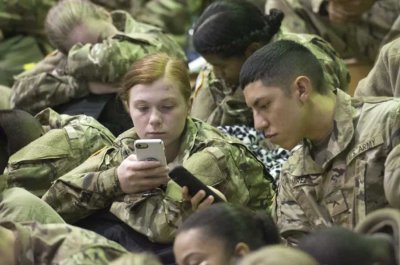

For instance, if service members encounter misinformation on TikTok, but can’t use TikTok on government-issued devices, misinformation training conducted on government devices might not obviously translate. Rather than a static, click-through course, military misinformation training needs to evolve as content does and meet troops where they are.

“People need to practice media literacy skills on the devices that they use most often,” Giles says.

Trainees check their cell phones and update family members at the Solomon Center on Fort Jackson in 2017. Photo by Robert Timmons, courtesy of the U.S. Army.

People also need to encounter mis- and disinformation and practice countering it in the media ecosystems they inhabit. Disinformation can appear in different forms to different people. Learning to debunk manipulative information isn’t just about verifying any one post. It’s about a broader understanding of how such information is designed to manipulate us.

“If you and I were sitting in a classroom, and you looked up ‘sandwiches’ and I looked up ‘sandwiches,’ we might get very different things…,” Giles says. “That’s important when we’re looking at things that are more important than sandwiches.”

‘The Majority of People Feel Frustrated’

In the burgeoning field of countering misinformation, inoculation theory has inspired hope. The approach takes its name from the idea behind vaccines: Give someone a little piece of something harmful, and when it comes around again, they’ll recognize it and mount a stronger defense.

The idea originated in the 1960s, stemming in part from fears that U.S. troops fighting abroad might be susceptible to psychological persuasion by the enemy. William McGuire, a social psychologist who later became chair of the Yale psychology department, pioneered the idea of what he called “a vaccine for brainwash,” arguing that it’s easier to tackle a problem early than to scramble to root it out after it’s taken hold.

Today, the idea generally holds that pairing a warning about an incoming threat to a person’s belief system with information that refutes the messaging likely to be in that threat helps people recognize and reject manipulative information.

“The idea is repeatedly debunking after the fact doesn’t work. What you need to do instead is what’s known as pre-bunking,” Singer says. “Don’t wait for the person to become sick. Give them the ability to defend themselves.”

A checklist educates people about how to spot fake news from both traditional and social media news sources. Graphic by Master Sgt. Renae Pittman, courtesy of the U.S. Air Force.

Given the “everyday” nature of the problem, just having everyday conversations about it to increase awareness is important, Giles and others say.

“I really believe the majority of people really feel frustrated about just the abundance of low-quality information online,” Giles says. “A lot of people have those questions.”

Understanding how and when disinformation campaigns are likely to proliferate is an important part of “pre-bunking.” Wendoloski points to the upcoming midterm elections as an example. Disinformation campaigns often follow news cycles. Thinking about what is coming down the pipeline can give potential targets a chance to prepare.

“I’m not going to get into the weeds about the candidates, because I want to try to stay apolitical, but knowing that this stuff’s going to start getting thrown around there and the environment, and maybe thinking about how to have a conversation about a sensitive topic …” he says.

Countering misinformation also requires understanding the varied nature of the threat itself. Misinformation proliferates on many platforms: Instagram, Facebook, Twitter, Parler, Telegram, TikTok, WhatsApp, LinkedIn, Pinterest—the list goes on and on.

“There’s just all kinds of stuff,” Wendoloski says. “You’re a leader—‘I got social media. I use Facebook.’ But then your newer soldiers might be using something like Discord.”

That doesn’t mean everybody needs to be on every social media site, Wendoloski says. But taking misinformation seriously means taking the time to understand the platforms those around you use, the types of information—and misinformation—they may encounter, and the particular challenges they pose.

The U.S. Air National Guard used humor in its posters to encourage airmen to think and investigate before they believe a post on social media. Graphic by Senior Airman Adam Smith, courtesy of the U.S. Air National Guard.

Just this summer, for instance, BuzzFeed News reported on leaked audio from TikTok that purported to show that Chinese engineers repeatedly accessed private data from U.S. users. And NewsGuard, an organization that rates the reliability of online information, found that, in the aftermath of Russia’s invasion of Ukraine, TikTok showed new users misinformation about the war in less than 40 minutes, without users even searching for Ukraine videos. In other countries, TikTok users have masqueraded as legitimate politicians ahead of elections.

“Many Americans—U.S. service members included—have not been viewing TikTok as a national security threat,” FCC Commissioner Brendan Carr told lawmakers last month. “They consider it to be just another app for sharing funny videos or memes. But that’s the sheep’s clothing.”

That worry—that military members will view and share something that inadvertently furthers narratives designed to sow discord and division, undermining national security—is the reason Wendoloski and others see addressing mis- and disinformation in the ranks as so critical.

“People in the military, you’re kind of formed around a common ethic or common values,” Wendoloski says. “You take an oath to support and defend the Constitution of the United States against enemies foreign and domestic. And potentially, you’re willing to share something, click the share button, and further an adversary’s message. That’s not what you signed up to do.”

This War Horse investigation was reported by Sonner Kehrt, edited by Kelly Kennedy, fact-checked by Ben Kalin, and copy-edited by Mitchell Hansen-Dewar. Headlines are by Abbie Bennett.
