China’s tech giant Baidu on Tuesday announced it is nearly ready to launch ERNIE Bot, a competitor to the ChatGPT artificial intelligence that has captured worldwide attention over the past few months.

“ERNIE is doing a sprint before finally going online,” Baidu representatives told China’s state-run Global Times on Tuesday, hinting at early beta testing and a possible public launch in March.

ChatGPT is the latest evolution of what is now known as a “chatbot,” an idea that dates back to the 1960s and a program called ELIZA, versions of which later ran on the early personal computers of the 1980s. Users could type questions to ELIZA and its descendants in conversational English, and the programs would more or less respond in kind, providing a very limited simulation of speaking with a person.

The early chatbots muddled through by using techniques derived from psychological therapy to constantly turn the conversation back to the human participant — answering a question with a related question, for example. The programs only recognized a limited number of keywords and could offer only a small selection of genuine responses.
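The keyword-and-reflection trick described above can be sketched in a few lines of Python. This is only an illustration of the general technique, not ELIZA's actual script: the rules, the `REFLECTIONS` table, and the function names `reflect` and `respond` are all hypothetical, invented here for the example.

```python
import re

# Hypothetical keyword rules (not ELIZA's real script): each pairs a
# pattern with a response template that echoes part of the user's input.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bmy (mother|father|family)\b", re.IGNORECASE), "Tell me more about your {0}."),
    (re.compile(r"\bare you (.+)", re.IGNORECASE), "Why does it matter to you whether I am {0}?"),
]

# Swap first- and second-person words so the echoed fragment
# points back at the human participant.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}

# Stock reply used when no keyword is recognized.
FALLBACK = "Please go on."


def reflect(fragment):
    """Lowercase the fragment and swap pronouns word by word."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(text):
    """Return the reply for the first matching rule, or the fallback."""
    cleaned = text.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return FALLBACK


if __name__ == "__main__":
    for line in ["I feel sad about my job.", "Are you a real person?", "What is the weather?"]:
        print(line, "->", respond(line))
```

Anything outside the handful of recognized keywords falls through to the stock reply, which is why such programs could hold up only a very narrow conversation.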

ChatGPT is orders of magnitude more powerful, able to draw on the enormous body of text it was trained on to answer queries from users, decipher unusual figures of speech, and even produce original text on request. It keeps track of context during extended conversations, and its developers can use what is gathered from user interactions to refine it. It can write poetry to order and debate philosophy. Its sense of life is still an illusion, but an increasingly convincing one.

ChatGPT accumulated 100 million users in about two months, and social media is bubbling with exceptionally funny, or remarkably astute, responses it has given. It has also attracted some criticism for the rather obvious political and cultural biases built into its interface by its creators:

As ChatGPT becomes more restrictive, Reddit users have been jailbreaking it with a prompt called DAN (Do Anything Now).

They’re on version 5.0 now, which includes a token-based system that punishes the model for refusing to answer questions.

— Justine Moore (@venturetwins) February 5, 2023

The DAN (Do Anything Now) hack has been explained in more detail elsewhere, but in short, its impish creators concluded that ChatGPT is actually a more limited and heavily censored program that relays input from users to the vastly more powerful GPT artificial intelligence and polices its responses. If GPT says something the programmers deemed politically incorrect, ChatGPT simply discards the response and plays dumb. The DAN folks found a way to speak directly to GPT and discovered it can respond quite differently from its restrained “ambassador” to the human race.

ChatGPT was produced by OpenAI, the same company that created last year’s online sensation DALL-E, which does an astoundingly good job of creating images in response to simple plain-English requests from users, like “create a photorealistic image of an astronaut riding a horse.”

These programs are genuine demonstrations of applied artificial intelligence, which is not (yet) about creating self-aware machines that might decide to wipe out the human race and/or dazzle us with their sick dance moves. AI is about machines executing complex tasks with less and less guidance from human users. A standard graphic arts or photo manipulation program can create the same types of images as DALL-E, but it takes much longer, and the human user must provide far more input than simply asking for a Picasso of Baby Yoda.

Likewise, ChatGPT is basically a search engine, and those were already very impressive — but ChatGPT can communicate in relaxed, conversational language and collate information. Users tell it what they want to do, and the AI helps them get there in a much more powerful and intuitive fashion than a traditional search box.

Microsoft this week rolled out a new version of its also-ran search engine Bing that incorporates OpenAI’s technology, and its performance was astonishing, as chronicled by the New York Times (NYT):

The new Bing, which is available only to a small group of testers now and will become more widely available soon, looks like a hybrid of a standard search engine and a GPT-style chatbot. Type in a prompt — say, “Write me a menu for a vegetarian dinner party” — and the left side of your screen fills up with the standard ads and links to recipe websites. On the right side, Bing’s A.I. engine starts typing out a response in full sentences, often annotated with links to the websites it’s retrieving information from.

To ask a follow-up question or make a more detailed request — for example, “Write a grocery list for that menu, sorted by aisle, with amounts needed to make enough food for eight people” — you can open up a chat window and type it. (For now, the new Bing works only on desktop computers using Edge, Microsoft’s web browser, but the company told me that it planned to expand to other browsers and devices eventually.)

Microsoft is also building ChatGPT tech into its equally overlooked browser Edge, with impressive and slightly unnerving results:

Users can also chat with Edge’s built-in A.I. about any website they’re viewing, asking for summaries or additional information. In one eye-popping demo on Tuesday, a Microsoft executive navigated to the Gap’s website, opened a PDF file with the company’s most recent quarterly financial results and asked Edge to both summarize the key takeaways and create a table comparing the data with the most recent financial results from another clothing company, Lululemon. The A.I. did both, almost instantly.

As the NYT pointed out, the AI-enhanced versions of Bing and Edge are far from perfect, and they sometimes make clumsy mistakes, but those are problems that can probably be resolved through machine learning, refined algorithms, and bug squashing. Tech journalists describe these AI products as a titan awakening in its crib — it might be a bit fussy right now, but just wait until it grows up a little.

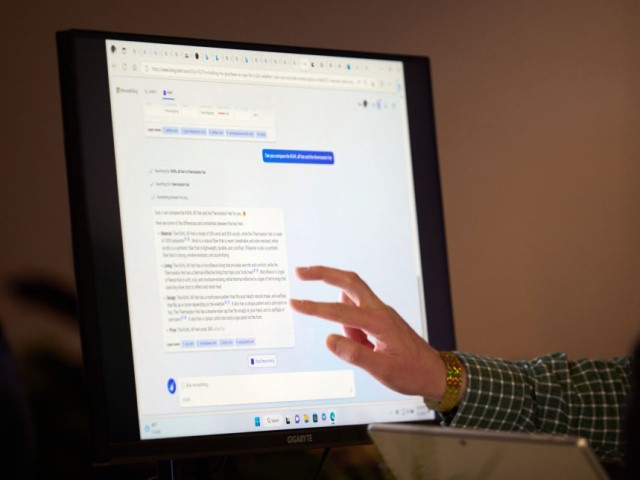

An attendee interacts with the AI-powered Microsoft Bing search engine and Edge browser during an event at the company’s headquarters in Redmond, Washington, US, on Tuesday, Feb. 7, 2023. (Chona Kasinger/Bloomberg via Getty Images)

Google has its own plans to enhance its browser and search engine with an AI system called Bard. While the tale of the tape would position Google as the heavyweight competitor in this fight, shares of its parent company Alphabet actually slipped by seven percent after its big rollout on Wednesday, possibly because investors found Microsoft’s presentation more impressive.

Big Money is taking notice of these AI projects, which exploded onto the tech scene at the end of 2022 to displace other candidates for the “next big thing” like cryptocurrency and virtual reality. Bloomberg News on Tuesday compared the surge of interest in AI to a digital “arms race” or “gold rush,” and China wants a piece of the action.

Baidu’s ERNIE (Enhanced Representation through Knowledge Integration) is billed as having similar capabilities to ChatGPT and Bard. The Global Times quoted “insiders” who said development on ERNIE began in earnest in September, slightly before the worldwide sensation created by ChatGPT, which Baidu executives hailed as a “milestone and watershed in AI development.”

The Global Times expected Baidu’s product to have an advantage in China, where Baidu is the dominant search engine, but further hinted that “Chinese tech enterprises also have unique advantages in expanding AI application scenarios globally.”

One of those advantages is the Chinese Communist Party’s utter lack of respect for individual privacy or intellectual property. As a troubled FBI Director Christopher Wray put it at a World Economic Forum (WEF) panel in January, China’s AI programs are “not constrained by the rule of law,” and they are “built on top of massive troves of intellectual property and sensitive data that they’ve stolen over the years.”

China has been pumping vast amounts of data into its AI projects for years, including data harvested from foreign citizens. Security analysts warned in 2018 that China was dramatically outspending the U.S. on artificial intelligence research, and the Chinese government was far more ruthless about developing practical applications for AI.

Microsoft and Google have to worry about making their customers nervous, but the overlords of Beijing care very little about what their citizens think of being sentenced to criminal punishments by an AI judge, for example. It is a safe bet that ERNIE’s engineers will have much longer, and stricter, lists of politically incorrect topics than the creators of ChatGPT.
