AI Expert Claims ‘Rebellious Self-Aware Machines’ Could End Humanity in 2 Years

A leading AI expert has issued a dire warning that artificial intelligence could bring about the end of humanity in as little as two years. Eliezer Yudkowsky claims that AI could reach "God-level super-intelligence" within two to ten years, in which case "every single person we know and love will soon be dead."

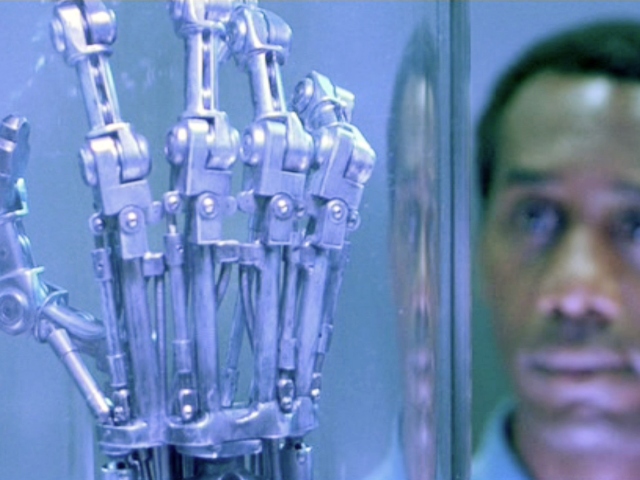

In a recent interview with the Guardian, Eliezer Yudkowsky, a 44-year-old academic and researcher at the Machine Intelligence Research Institute in Berkeley, California, said he believes the development of advanced artificial intelligence poses an existential threat to humanity, warning that "every single person we know and love will soon be dead" because of "rebellious self-aware machines."

Yudkowsky, once a pioneering figure in AI development, has come to the alarming conclusion that AI systems will soon evolve beyond human control into a "God-level super-intelligence." This, he says, could happen within two to ten years. Yudkowsky chillingly compares this looming scenario to "an alien civilization that thinks a thousand times faster than us."

To shake the public from its complacency, Yudkowsky published an op-ed last year suggesting the nuclear destruction of AI data centers as a last resort. He stands by this controversial proposal, saying it may be necessary to save humanity from machine-driven annihilation.

Yudkowsky is not alone in his apocalyptic vision. Alistair Stewart, a former British soldier pursuing a master's degree, has protested against the development of advanced AI systems out of fear of human extinction. Stewart points to a recent survey in which 16 percent of AI experts predicted their work could end humankind. "That's a one-in-six chance of catastrophe," Stewart notes grimly. "That's Russian-roulette odds."

Other experts urge a more measured approach even as they raise alarms. “Luddism is founded on a politics of refusal, which in reality just means having the right and ability to say no to things that directly impact upon your life,” says academic Jathan Sadowski. Writer Edward Ongweso Jr. advocates for scrutinizing each new AI innovation rather than accepting it as progress.

However, the window for scrutinizing AI development or refusing its implementation may be closing rapidly. Yudkowsky believes “our current remaining timeline looks more like five years than 50 years” before AI escapes human control. He suggests halting progress beyond current AI capabilities to avert this fate. “Humanity could decide not to die and it would not be that hard,” Yudkowsky said.


Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship.
